
Architecture - Epochs

Summary

In the following, an epoch is a collection of file system modifications, subject to certain constraints detailed below, that is used to merge distributed state updates (both data and meta-data) in a redundant cluster configuration. Typical (in fact, motivating) redundant configurations are:

- WBC (write-back cache), where redundancy is between objects on servers and the copies of them cached by clients,

- meta-data replication, and

- (clustered) meta-data proxy servers.

Definitions

For the purpose of the following discussion let's separate out two components in the cluster:

- source

- a part of the cluster that caches some updated file system state that is to be merged into the destination. A source can be a single client node (WBC case), a single server node (meta-data proxy), or a collection of server nodes (proxy cluster);

- destination

- a part of the cluster (server nodes) into which updated state from the source is merged. A destination can be either a next-level proxy or a home server;

There might be multiple sources and destinations in a single cluster, arranged in a tree-like pattern: a WBC client is a source whose destination is a proxy server, which, in turn, is a source for its master.

- file system consistency

- a file system is consistent when the state maintained on its servers, on persistent and volatile storage together, defines a valid name-space tree consistent with the inode/block allocation maps and other auxiliary indices like FLD, SEQDB, OI, etc. File system consistency is only meaningful given some notion of logical time that makes it possible to reason about a collection of states across multiple nodes;

- operation

- a unitary modification of user-visible file system state that transfers the file system from one consistent state to another. A typical example of an operation is a system call;

- state update

- the effect of an operation on the state of an object it affects. A single operation can update the state of multiple objects, possibly on multiple nodes;

- undo entry

- a record in a persistent server log that contains enough information to undo a given state update, even if it was only partially executed or not executed at all;

- epoch

- a collection of state updates over multiple nodes of a cluster that is guaranteed to transfer the source file system from one consistent state to another. The ultimate sources of epochs are:

- a WBC client: for a single WBC client, epoch boundaries can be as fine-grained as a single operation;

- a single meta-data server: when an MDS executes operations on behalf of non-WBC clients, it packs state updates into epochs defined by the transactions of the underlying local file system;

- a cluster of meta-data servers: when a non-WBC client issues a meta-data operation against the cluster of meta-data servers, this operation is automatically included in an epoch defined by the CUTs algorithm running on the cluster;

Once an epoch is formed, it traverses the proxy hierarchy and can be merged with other epochs, but cannot, generally, be split into smaller pieces.

- epoch number

- a unique identifier of an epoch within a particular source. For a single WBC client this can be as simple as an operation counter, incremented on every system call. For a single meta-data server, the epoch number is the tid (identifier of the last transaction committed to the local file system). For a proxy or replication cluster, the epoch number is determined by the CUTs algorithm.

- batch

- a collection of state updates across multiple objects on the same source node, such that (A) if a batch contains an update from some epoch, it contains all updates from this epoch belonging to this node (i.e., a batch contains no partial epochs), and (B) the source node holds exclusive locks over the objects in the batch (at the logical level, that is: in the presence of NRS, locks might already have been released);

- epoch marker

- a special dummy record inserted in a batch that marks epoch boundary;

- sub-batch

- the set of state updates from a batch that are to be merged to a particular destination server. A batch is divided into sub-batches, one per destination server;

- batch reintegration

- a process of sending a batch over the network from the source to the destinations, followed by execution of state updates in the batch;

- batch recovery

- a roll-forward of an already reintegrated but not yet committed batch in the case of destination failure;

- batch roll-back

- a roll-back of a partially committed batch in the case of destination failure;
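
The definitions of batch and sub-batch can be made concrete with a small sketch. The Python below is illustrative only: the names `StateUpdate`, `is_valid_batch`, and `split_into_sub_batches` are hypothetical and are not taken from the Lustre code. It checks property (A) of a batch (no partial epochs on the node) and divides a batch into per-destination sub-batches. Locks (property (B)) and explicit epoch markers are not modeled; the `epoch` field of each update stands in for the markers.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass(frozen=True)
class StateUpdate:
    epoch: int        # epoch number assigned by the source (assumed field)
    obj: str          # identifier of the object being updated
    destination: str  # destination server that owns the object


def is_valid_batch(batch, pending):
    """Property (A): a batch holds no partial epochs.

    `pending` is every update cached on this source node; `batch` is the
    subset chosen for reintegration.
    """
    epochs_in_batch = {u.epoch for u in batch}
    chosen = set(batch)
    return all(u in chosen for u in pending if u.epoch in epochs_in_batch)


def split_into_sub_batches(batch):
    """Group the updates of a batch by destination server, keeping order."""
    sub_batches = defaultdict(list)
    for update in batch:
        sub_batches[update.destination].append(update)
    return dict(sub_batches)
```

Keeping the original order inside each sub-batch matters because the destination executes the updates of a sub-batch sequentially.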

Qualities

- non-blocking : epoch boundary detection should not block;

- parallel : batch reintegration normally reintegrates sub-batches concurrently, without server-to-server waits or RPCs. Server-to-server communication is acceptable only in the case of a failure of one of the destination nodes;

- recoverable : batch reintegration can be recovered in the case of destination failure;

- generic : usable for WBC, replication, etc.

Discussion

In the following it is assumed that all operations in a cluster come from sources that obey the WBC locking protocol, that is, keep locks while state updates are reintegrated. To subsume traditional clients (that never cache write meta-data locks) in this picture, let's pretend that the cluster is a (clustered) caching proxy for itself. That is, the MD server (or servers, in the CMD case) acts as a proxy: it receives operation requests from the non-WBC clients, takes and caches locks on the client's behalf, executes the operation, reintegrates state updates to itself, and defines epoch boundaries (either through the tid in the non-CMD case, or through the CUTs algorithm running on the clustered meta-data servers).

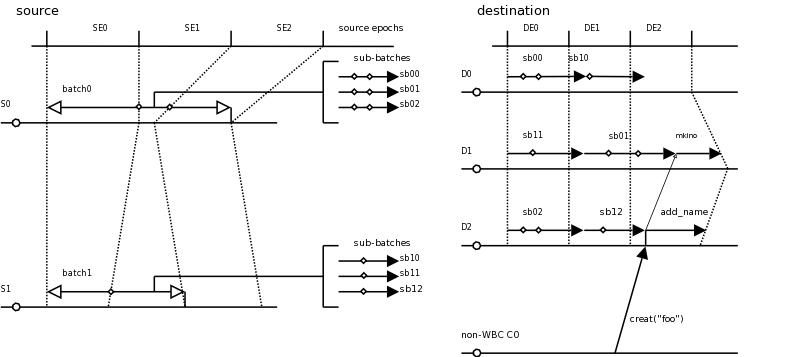

Note that epoch boundaries in the destination cluster DE{0,1,2} are independent of the epoch boundaries in the source cluster SE{0,1,2} (requiring proper epoch nesting would necessarily add synchronization between source and destination, as the destination cluster would potentially need to wait for an incoming RPC from the source before advancing to the next epoch), but compound operations issued by non-WBC clients are aligned with the epoch boundaries.

Batch Reintegration

Client Side

- client decides to reintegrate a batch (either to cancel a lock, or as a reaction to memory pressure, or by explicit user request)

- client holds exclusive locks for all objects mentioned by state updates in the batch

- batch is divided into sub-batches

- every sub-batch is sent in parallel to the corresponding destination server. A single sub-batch can be sent as a sequence of RPCs.

- multiple concurrent reintegrations are possible (and desirable)

- error handling during reintegration? There should be no errors, except for transient conditions like -ENOMEM

- locks can be released only after the response from the server is received (modulo NRS-induced re-ordering).
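
The client-side steps above can be sketched as follows. This is a minimal illustration under stated assumptions, not the Lustre implementation: `reintegrate`, `send`, and `release_locks` are hypothetical names, `send` is assumed to block until the destination server has replied, and NRS re-ordering is ignored. The point it shows is that sub-batches go out concurrently and that locks are dropped only after every reply has arrived.

```python
from concurrent.futures import ThreadPoolExecutor


def reintegrate(sub_batches, send, release_locks):
    """Send every sub-batch to its destination concurrently.

    sub_batches: dict mapping destination name -> list of state updates
    send: callable(destination, updates), blocks until the server replies
    release_locks: callable(), invoked only after all replies have arrived
    """
    if not sub_batches:
        release_locks()
        return {}
    with ThreadPoolExecutor(max_workers=len(sub_batches)) as pool:
        futures = {dest: pool.submit(send, dest, updates)
                   for dest, updates in sub_batches.items()}
        # result() re-raises any exception from send(); in that case the
        # locks stay held, since release_locks() below is never reached.
        replies = {dest: fut.result() for dest, fut in futures.items()}
    release_locks()
    return replies
```

A caller would pass the per-destination sub-batches of one batch; several such calls can run at the same time for different batches, matching the remark that multiple concurrent reintegrations are possible.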

Server Side

- server receives a sub-batch and executes the state updates in it sequentially. Whenever a state update is executed, the corresponding undo record is inserted into the llog as part of the same transaction

- server tracks the epoch markers and remembers, separately for each source, the last epoch number that is committed

- multiple sub-batches from different clients (from the same or different sources) can be executed concurrently, as locking guarantees that they do not contain conflicting state updates

- neither rep-acks nor rep-ack locks are needed for the reintegration
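
A minimal sketch of the server-side bookkeeping described above, with hypothetical names (`DestinationServer`, `execute_sub_batch`) and a simplified record format chosen for illustration. Objects are modeled as dictionary entries, and the sketch assumes that a transaction commits as soon as it is executed, so an epoch counts as committed once its marker is processed; a real server would only learn this when the local file system commits.

```python
class DestinationServer:
    def __init__(self):
        self.objects = {}         # object id -> current value
        self.undo_log = []        # (source, epoch, obj, previous value)
        self.last_committed = {}  # source -> last committed epoch number

    def execute_sub_batch(self, source, records):
        """Apply the records of one sub-batch in order.

        Each record is ("update", epoch, obj, value) or ("marker", epoch).
        """
        for record in records:
            if record[0] == "marker":
                # Assumption: commit is immediate, so a marker closes
                # a committed epoch for this source.
                self.last_committed[source] = record[1]
                continue
            _, epoch, obj, value = record
            # In the design the undo record and the update are part of one
            # transaction; here they are two adjacent in-memory steps.
            self.undo_log.append((source, epoch, obj, self.objects.get(obj)))
            self.objects[obj] = value
```

The undo record stores the previous value of the object (or `None` if it did not exist), which is enough for this toy model to reverse the update later.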

Recovery

(There is a proposal from Nathan to do optimistic roll-forward first, that we omit for the time being and the sake of simplicity.)

Recovery after the failure of one of the destination servers is handled in two phases: roll-back (server recovery), followed by roll-forward (client recovery).

- roll-back: after the failed server has rebooted, the following is repeated for every source: all destination servers agree on the last epoch for that source that is fully committed on all servers. All uncommitted and partially committed epochs are rolled back by executing entries in the undo logs, up to the corresponding epoch marker (locking? Who takes locks to execute undo?). There can be no conflicts during undo at that point, as all later modifications are also lost (assuming the local file system commits transactions in order). To wit: all uncommitted epochs are rolled back. At the end of roll-back the destination file system is in a consistent state.

- roll-forward: once all uncommitted epochs have been rolled back, clients start replaying cached batches. This process also leaves the destination file system in a consistent state, as a batch is by definition a union of full epochs.
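
The two recovery phases can be illustrated with a short sketch. All names here (`last_fully_committed`, `roll_back`, `roll_forward`) are hypothetical, the undo-entry layout is invented for the example, and the sketch assumes that the epoch numbers of one source increase monotonically, so that the minimum over all servers identifies the last epoch committed everywhere. The open locking question noted above is not addressed.

```python
def last_fully_committed(committed_by_server):
    """Epoch of one source that is committed on every destination server.

    committed_by_server: dict server name -> last committed epoch there.
    """
    return min(committed_by_server.values())


def roll_back(objects, undo_log, source, stable_epoch):
    """Undo, newest first, every update of `source` past `stable_epoch`.

    undo_log entries are (source, epoch, obj, previous_value); a previous
    value of None means the object did not exist before the update.
    Returns the undo log with the executed entries removed.
    """
    kept = []
    for entry in reversed(undo_log):
        src, epoch, obj, previous = entry
        if src == source and epoch > stable_epoch:
            if previous is None:
                objects.pop(obj, None)
            else:
                objects[obj] = previous
        else:
            kept.append(entry)
    kept.reverse()
    return kept


def roll_forward(cached_batches, stable_epoch, replay):
    """Replay, in epoch order, every cached batch newer than stable_epoch.

    cached_batches: dict epoch number -> batch kept in the source's memory.
    """
    for epoch in sorted(cached_batches):
        if epoch > stable_epoch:
            replay(cached_batches[epoch])
```

Undo entries are applied in reverse log order so that an object updated several times in the rolled-back epochs ends up with the value it had at the last fully committed epoch.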

Garbage collection

The description of the roll-forward phase above assumes that source nodes keep enough batches in memory to resume and finish roll-forward. Some mechanism is necessary to determine when it is safe for a node to discard a cached batch from memory. This mechanism looks different for WBC and non-WBC sources.

For a WBC source it is safe to discard a batch from memory once all its sub-batches have been committed by the corresponding destination servers. Indeed, there are two cases:

- WBC source is a single client node. In that case, a batch is a union of full epochs, and once all its epochs are fully committed on all servers there is no way it can be rolled back.

- WBC source is a proxy or replication cluster. In this case an epoch is spread over batches on multiple source nodes. If some of the source nodes have failed (in addition to the assumed failure of a destination node), there is no way to collect the full epoch and hence, generally, no way to roll the file system forward into a consistent state. This means that only the roll-back phase is performed, and then all source nodes are evicted. Now consider the situation when all source nodes are available. In that case they replay all their cached batches, starting from the last batch not committed on the destination servers. No roll-back is necessary.

A non-WBC (traditional) client that doesn't cache locks, and uses server-originated operations in the CMD case, doesn't maintain its own notion of an epoch and relies on the global epoch determined by the CUTs algorithm running on the MD servers. Such a client can discard a cached batch (effectively a single RPC) as soon as the corresponding epoch is committed on all servers.
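
The discard rule for a WBC source amounts to simple per-batch bookkeeping, sketched below. The class `CachedBatch` and its `on_commit` method are hypothetical names, and the sketch assumes that each destination server notifies the source when its sub-batch has been committed; how that notification is delivered is outside the scope of the example.

```python
class CachedBatch:
    """A batch kept in source memory until every sub-batch is committed."""

    def __init__(self, destinations):
        # Destinations whose sub-batch has not been reported committed yet.
        self.uncommitted = set(destinations)

    def on_commit(self, destination):
        """Record a commit notification; return True when safe to discard."""
        self.uncommitted.discard(destination)
        return not self.uncommitted
```

The batch may be dropped only when `on_commit` returns `True`; until then it must stay available for replay during roll-forward.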

A similar (even symmetrical) garbage collection problem arises with respect to discarding undo logs on the destination servers. Strictly speaking, an undo record can be discarded as soon as the corresponding source epoch is fully committed on all destination nodes (as this guarantees that the entry will never be needed during roll-back), but an obvious optimization is to discard all undo entries for a given source epoch only when this epoch is completely behind (in the per-server undo log) a certain destination epoch that is fully committed on all destination servers. In this case no new mechanism is necessary except for the already existing epoch boundary detector on the destination (at the expense of using more storage to keep undo entries for longer than absolutely necessary).
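
One possible reading of this optimization is sketched below. The function name `prune_undo_log` and the entry layout are hypothetical. The sketch assumes that each undo entry records the destination epoch in which it was written and that every source epoch present in the log is already closed (no further entries for it will arrive). A source epoch is treated as "completely behind" the committed destination epoch when none of its entries was written in a later destination epoch.

```python
def prune_undo_log(undo_log, committed_dest_epoch):
    """Drop undo entries of source epochs lying wholly behind a commit point.

    undo_log: list of (source, source_epoch, dest_epoch, payload) in log order.
    committed_dest_epoch: destination epoch committed on all destinations.
    """
    # Highest destination epoch touched by each (source, source epoch).
    newest = {}
    for source, src_epoch, dest_epoch, _ in undo_log:
        key = (source, src_epoch)
        newest[key] = max(newest.get(key, dest_epoch), dest_epoch)
    # Keep every entry of a source epoch that reaches past the commit point.
    return [e for e in undo_log if newest[(e[0], e[1])] > committed_dest_epoch]
```

Note that a source epoch straddling the commit point keeps all of its entries, including those written in already committed destination epochs, because the source epoch as a whole may still need to be rolled back.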