WARNING: This is the _old_ Lustre wiki, and it is in the process of being retired. The information found here is all likely to be out of date. Please search the new wiki for more up to date information.

Architecture - Server Network Striping


Revision as of 12:07, 22 January 2010

Note: The content on this page reflects the state of design of a Lustre feature at a particular point in time and may contain outdated information.

Summary

Server Network Striping (SNS) is Lustre-level striping of file data over multiple object servers with redundancy, in a style similar to RAID disk arrays. It improves the reliability and availability of the system at the cost of a small amount of redundant data, and it can tolerate the failure of one or more nodes. SNS will use the CMU RAIDframe subsystem for rapid prototyping of the distributed networked RAID system.

Definitions

SNS Server Network Striping

Striped Object The collection of objects on multiple OSTs forming a striped object.

Chunk Object A logical or persistent object containing data and/or redundant data for a striped object. A striped object consists of its chunk objects.

Stripe Unit Minimal extent in chunk objects.

Full Stripe A collection of stripe units in chunk objects that maps 1-1 to an extent in the striped object. (In RAIDframe, "stripe" is short for full stripe.)

Partial truncate A truncate in a parity RAID pool whose resulting size is not aligned to the full-stripe size.

Pool A pool is a name associated with a set of OSTs in a Lustre cluster.

DAG Directed Acyclic Graph. RAIDframe uses DAGs to model RAID operations.

ROD Read Old Data.

ROP Read Old Parity.

WND Write New Data.

WNP Write New Parity.

IOV Page (brw_page) vector.
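To illustrate how ROD, ROP, WND and WNP relate to each other, the sketch below computes the parity for a RAID5-style small write (read-modify-write): new parity = old parity XOR old data XOR new data. This is a minimal Python sketch of the standard parity arithmetic, not RAIDframe code; the function names are hypothetical.

```python
def xor_bytes(a: bytes, b: bytes) -> bytes:
    """Byte-wise XOR of two equal-length buffers."""
    if len(a) != len(b):
        raise ValueError("buffers must have equal length")
    return bytes(x ^ y for x, y in zip(a, b))


def small_write_parity(old_data: bytes, old_parity: bytes, new_data: bytes) -> bytes:
    """Parity for a read-modify-write.

    old_data comes from ROD, old_parity from ROP; new_data is written by
    WND and the returned buffer is what WNP would write.
    """
    return xor_bytes(xor_bytes(old_parity, old_data), new_data)


# Three data stripe units and their parity, then overwrite unit 1.
units = [b"\x01\x02", b"\x10\x20", b"\x0f\x0f"]
parity = xor_bytes(xor_bytes(units[0], units[1]), units[2])
new_unit = b"\xaa\xbb"
new_parity = small_write_parity(units[1], parity, new_unit)
```

The result equals the parity recomputed from scratch over the updated stripe, which is why only the modified unit and the parity unit have to be read.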

Requirements

SNS Fundamentals

- SNS should be based on RAIDframe and support different kinds of RAID geometries.

- SNS should provide high availability while keeping reasonably high performance.

- SNS should be implemented as a separate, optional layer: Lustre can work with or without it, and SNS can support LOV-less configurations and local mounts.

- New client I/O interfaces should match the I/O interfaces of the RAIDframe engine.

- The new file layout format should accommodate various RAID patterns.

- Support setting up and configuring the SNS OBD via mountconf.

- Extent locks that cover a full stripe across several OSTs should not result in cascading evictions.

- Handle grants/quota correctly.

- Handle file metadata (e.g. file size) with redundancy correctly in both the normal and the degraded case.

- Support retry of failed I/O during DAG execution.

SNS Detailed Recovery

A number of possible failures have to be addressed:

- A client crashes in the middle of a stripe write.

- OST(s) crash or power off.

- OST(s) experience an I/O error or disk failure.

- A network failure occurs in communication between client(s) and one or more OSTs containing stripes.

To handle these failures gracefully, failure detection and the recovery procedure must meet the following requirements:

- The CUT preserves striped object consistency; it cannot contain inconsistent stripe writes.

- An OST is able to roll back incomplete stripe writes in case of a client crash.

- An I/O error may be handled by switching the object to degraded mode.

- Striped object recovery may continue even if some stripe sites are not available, by switching the object to degraded mode.

- Object consistency constraints apply to the process of client redo-log replay.

- RAID online recovery (degraded -> normal).

SNS Architecture Overview

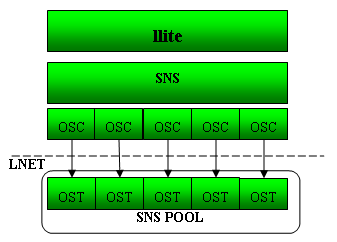

SNS is implemented as a separate module. Similar to LOV, each SNS is a new kind of OBD; it talks to the various OSTs in the SNS pool via the underlying OSCs but appears as a single target to llite.

SNS can stack directly under llite, or directly on top of local disks/OBDs to support local mount.

Moreover, file objects in an SNS pool can have various RAID patterns such as RAID0, RAID1, RAID5, declustered parity, etc.
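A RAID pattern ultimately determines how an offset in the striped object maps to a chunk object. The sketch below shows one possible mapping for a RAID5-like pattern. The rotation rule (parity moves one chunk per full stripe, data units follow the parity chunk) and all names are assumptions made for illustration; RAIDframe supports several layouts and this page does not prescribe one.

```python
from typing import NamedTuple


class UnitLocation(NamedTuple):
    stripe: int        # full-stripe index
    chunk: int         # chunk object holding the data
    chunk_offset: int  # byte offset inside that chunk object
    parity_chunk: int  # chunk object holding this stripe's parity


def map_offset(offset: int, unit_size: int, width: int) -> UnitLocation:
    """Map a striped-object offset to a chunk object (hypothetical layout).

    width is the number of chunk objects. Each full stripe holds
    width - 1 data stripe units and one parity stripe unit.
    """
    data_units = width - 1
    unit_index = offset // unit_size
    stripe = unit_index // data_units
    # Assumed rotation: parity starts on the last chunk and moves left.
    parity_chunk = width - 1 - stripe % width
    data_slot = unit_index % data_units
    # Data units occupy the chunks after the parity chunk, wrapping around.
    chunk = (parity_chunk + 1 + data_slot) % width
    chunk_offset = stripe * unit_size + offset % unit_size
    return UnitLocation(stripe, chunk, chunk_offset, parity_chunk)


first = map_offset(0, 4096, 4)
second_stripe = map_offset(3 * 4096, 4096, 4)
```

With four chunk objects and 4096-byte stripe units, offset 0 lands on chunk 0 with parity on chunk 3, and the first byte of the second full stripe lands on chunk 3 with parity on chunk 2.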

SNS write as a distributed transaction

An SNS write operation is a distributed transaction when several stripes on different servers are updated.

A simple approach is to let the client issue all write requests to the data servers. The client starts the transaction and communicates directly with the servers. In terms of Lustre transactions the client is the originator and the OSTs are replicators.

Unfortunately, clients have no persistent storage (at least we assume so), which makes it impossible to reuse Lustre transactions with redo-logging on the originator side.

Undo-logging on the OST side is a solution to this problem. An undo operation can be triggered by a timeout if the client doesn't close the transaction correctly within a specified period of time.

Undo logging at OST side

Llogs with undo records are updated transactionally together with each OST write operation. A trick keeps the undo records small: a modifying write puts its data into a new block and adds an undo record that points to the old data location. An append is recorded in the llog as a file size increase.
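The idea of small undo records can be modelled in a few lines. The following is a toy in-memory sketch, not Lustre code: the class, its fields and the record formats are all hypothetical. An overwrite remaps the block to a new location and logs only the old location; an append logs only the old size.

```python
from dataclasses import dataclass, field


@dataclass
class ChunkObject:
    """Toy chunk object: a block map plus an undo log (names hypothetical)."""
    blocks: dict = field(default_factory=dict)   # logical block -> location
    storage: dict = field(default_factory=dict)  # location -> data
    size: int = 0                                # object size in blocks
    undo: list = field(default_factory=list)     # records of the open transaction
    next_loc: int = 0

    def write(self, block: int, data: bytes) -> None:
        # New data always goes to a fresh location, so the undo record
        # only remembers where the old data lives, not the data itself.
        loc = self.next_loc
        self.next_loc += 1
        self.storage[loc] = data
        if block < self.size:
            self.undo.append(("remap", block, self.blocks.get(block)))
        else:
            self.undo.append(("size", self.size))
            self.size = block + 1
        self.blocks[block] = loc

    def commit(self) -> None:
        """The client closed the transaction: drop the undo records."""
        self.undo.clear()

    def rollback(self) -> None:
        """Undo the open transaction, newest record first."""
        for record in reversed(self.undo):
            if record[0] == "remap":
                _, block, old_loc = record
                if old_loc is None:
                    self.blocks.pop(block, None)
                else:
                    self.blocks[block] = old_loc
            else:
                _, old_size = record
                for block in [b for b in self.blocks if b >= old_size]:
                    del self.blocks[block]
                self.size = old_size
        self.undo.clear()

    def read(self, block: int) -> bytes:
        return self.storage[self.blocks[block]]


obj = ChunkObject()
obj.write(0, b"old")
obj.commit()
obj.write(0, b"new")   # overwrite: logs the old location of block 0
obj.write(1, b"tail")  # append: logs the old size
obj.rollback()         # e.g. triggered by the client timeout
```

After the rollback the object again has size 1 and block 0 reads back the committed data. Reclaiming the locations left behind by undone writes is omitted from the sketch.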

RAIDframe limitations

- All needed stripe locks must be acquired before DAG execution and released after it.

- RAIDframe has no built-in support for metadata consistency (for example, file size).

- I/O must be page-aligned.

- RAIDframe needs to know the stripe state (number and location of failed chunks) to construct a correct DAG, and it is difficult to keep that state up to date across the network.

Metadata redundancy

- The striped object size is stored with each chunk object.

- For each stripe, information about failed chunks is stored with each chunk object.

DAG creation

Achieving consistency in DAG creation procedure.

A client creates a DAG based on cached information about the striped file. If the DAG doesn't match the current stripe state, the OST replies with an error asking the client to re-create a correct DAG.
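This optimistic scheme can be sketched as a build, submit and retry loop. The sketch is illustrative only: the exception, the `ToyOST` class and the dictionary standing in for a DAG are hypothetical, and it assumes that the error reply carries the current stripe state.

```python
class StaleStripeState(Exception):
    """Toy error reply: the DAG was built from outdated stripe state."""

    def __init__(self, current_failed: frozenset):
        super().__init__("stale stripe state")
        self.current_failed = current_failed


def build_dag(failed_chunks: frozenset) -> dict:
    """Stand-in for DAG creation: record which state the DAG was built for."""
    mode = "degraded" if failed_chunks else "normal"
    return {"mode": mode, "built_for": failed_chunks}


class ToyOST:
    def __init__(self, failed_chunks=()):
        self.failed_chunks = frozenset(failed_chunks)

    def execute(self, dag: dict) -> str:
        # Reject DAGs that do not match the current stripe state.
        if dag["built_for"] != self.failed_chunks:
            raise StaleStripeState(self.failed_chunks)
        return dag["mode"]


def submit(ost: ToyOST, cached_failed: frozenset, max_attempts: int = 3) -> str:
    """Build a DAG from cached state; rebuild it if the OST rejects it."""
    for _ in range(max_attempts):
        dag = build_dag(cached_failed)
        try:
            return ost.execute(dag)
        except StaleStripeState as err:
            cached_failed = err.current_failed  # refresh the cache, then retry
    raise RuntimeError("stripe state kept changing")


result = submit(ToyOST(failed_chunks={2}), cached_failed=frozenset())
```

Here the client starts with a cache that says no chunk has failed, is rejected once, and succeeds with a degraded-mode DAG on the second attempt.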

I/O components

RAIDframe requires that I/O submitted to the RAIDframe engine be contiguous and sector-size (page-size) aligned. The new I/O layer and interfaces should match the I/O characteristics of the RAIDframe engine and simplify its integration into SNS.

The I/O path and components of SNS are shown in the graph below:

Common I/O path and components with/without SNS

- read: Initialize the generic client page (cl_page) of the various layers; form an IOV in the llite layer and submit it to the lower layer via cl_submit_iov.

- readahead: Add readahead pages to the IOV via the interface cl_add_page, which is similar to 'bio_add_page' in the Linux kernel and checks whether the IOV is already optimal, crosses a stripe boundary, etc.; then submit the IOV to the lower layer via cl_submit_iov.

- directIO: Almost the same as the original path: form an IOV and submit it to the lower layer.

- writepage: Check whether the write is out of grants/quota or hits the dirty maximum of the object/OBD. If so, start writing out pages to update quota/grants; otherwise, the page manager queues the dirty page and caches it on the client.

- Page manager: The page manager caches and manages the generic cl_page of the various layers. In the new client I/O layering, a page manager can in theory be implemented in any layer. An important function of the page manager is forming IOVs: once enough dirty pages have accumulated in its queue to build a good IOV, it forms one and submits it to the next I/O component to achieve maximum throughput; once the client is out of grants/quota or hits the dirty maximum of the object/OBD, it starts batching dirty pages into IOVs and submits them to the next I/O component.

- OSC I/O scheduler: An optional component. To use network bandwidth efficiently, the OSC should build RPCs of the maximum RPC size. This needs an I/O scheduler, together with a timer and an IOV queue in the OSC, to delay and merge I/O requests, especially when RAIDframe divides good IOVs into small sub-IOVs for the various chunk objects. Similar to the Linux I/O scheduler, IOVs from various objects are queued in the scheduler, which can merge small IOVs from different objects into one big I/O RPC. Moreover, by adjusting the unplug expiration period it can implement local traffic control, combined with the Network Request Scheduler (NRS), according to feedback from the server.

OSC I/O scheduler

The OSC I/O scheduler can be implemented in the OSC or as a separate module. It has the following features:

- Each OSC OBD has an optional I/O scheduler together with two separate IOV queues, one for reads and one for writes.

- The timer expiration period of the scheduler can be tuned for reads and writes.

- IOVs are queued in the IOV queues and preferentially merged by chunk object.

- The OSC I/O scheduler processes the queued IOVs to build I/O RPCs when:

  - there are enough IOVs in the queue to form a max-size RPC;

  - the unplug timer expires;

  - the upper layer triggers it for fsync/sync or sync_page operations.
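The three triggers listed above can be modelled with a small queue. This is a toy sketch under stated assumptions, not OSC code: the class and method names are hypothetical, an IOV is reduced to a (chunk object, page count) pair, time is passed in explicitly, and only one of the two queues (read or write) is shown.

```python
class IovScheduler:
    """Toy model of one IOV queue of an OSC I/O scheduler."""

    def __init__(self, max_rpc_pages: int, unplug_after: float):
        self.max_rpc_pages = max_rpc_pages
        self.unplug_after = unplug_after  # tunable timer period
        self.queue = []                   # (chunk_id, pages, queued_at)

    def _build_rpc(self) -> list:
        # Sort by chunk object so IOVs of the same object are merged first;
        # the sort is stable, so queue order is kept within one object.
        self.queue.sort(key=lambda iov: iov[0])
        rpc, pages = [], 0
        while self.queue and pages + self.queue[0][1] <= self.max_rpc_pages:
            chunk_id, count, _ = self.queue.pop(0)
            rpc.append((chunk_id, count))
            pages += count
        return rpc

    def flush(self) -> list:
        """Unplug unconditionally, e.g. for fsync/sync or sync_page."""
        rpcs = []
        while self.queue:
            rpcs.append(self._build_rpc())
        return rpcs

    def submit(self, chunk_id: str, pages: int, now: float) -> list:
        """Queue an IOV; return the RPCs that became ready as a result."""
        if not 0 < pages <= self.max_rpc_pages:
            raise ValueError("IOV must fit into one RPC")
        self.queue.append((chunk_id, pages, now))
        rpcs = []
        while sum(p for _, p, _ in self.queue) >= self.max_rpc_pages:
            rpcs.append(self._build_rpc())
        return rpcs

    def tick(self, now: float) -> list:
        """Timer callback: unplug if the oldest IOV has waited long enough."""
        if self.queue and now - min(t for _, _, t in self.queue) >= self.unplug_after:
            return self.flush()
        return []


sched = IovScheduler(max_rpc_pages=4, unplug_after=1.0)
sched.submit("a", 2, now=0.0)
sched.submit("b", 1, now=0.1)
full = sched.submit("a", 1, now=0.2)   # queue now holds a max-size RPC
sched.submit("c", 1, now=0.3)
early = sched.tick(now=0.5)            # timer has not expired yet
late = sched.tick(now=1.3)             # timer expired: unplug
```

In this run the third submit completes a four-page RPC that groups the two IOVs of object "a" ahead of the one for "b", and the leftover IOV for "c" is only sent when the timer expires.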

SNS I/O components

SNS contains three I/O components: RAIDframe, the page manager, and the RF-OSC I/O wrapper.

- RAIDframe uses DAGs to model RAID operations. After receiving a contiguous IOV issued by the upper layer or the page manager, the RAIDframe engine builds DAGs containing various kinds of nodes such as ROD, ROP, WND, WNP, XOR, C, etc., and then executes them. (See "RAIDframe: Rapid Prototyping for Disk Arrays" for details.)

- Page manager: In a configuration without SNS, the page manager is implemented in the OSC layer and simply chains dirty pages into the list of the corresponding chunk object. In SNS it is much more complex: RAIDframe configured with a parity pattern prefers full-stripe writes for good performance, so dirty pages are managed by the stripe index they belong to, which makes it easy to batch full-stripe IOVs.

- The RF-OSC I/O wrapper submits the sub-IOVs (ROD, ROP, WND or WNP generated by the RAIDframe engine) to the lower layer.

- By reusing the layout interfaces in RAIDframe, SNS implements functions such as offset conversion, size calculation, etc.
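The stripe-indexed bookkeeping of the SNS page manager can be sketched as follows. This is a hypothetical Python model, not the proposed implementation: `pages_per_stripe` is assumed to count the data pages of one full stripe, and pages are identified by their index in the striped object.

```python
from collections import defaultdict


class StripePageManager:
    """Toy page manager that groups dirty pages by full-stripe index."""

    def __init__(self, pages_per_stripe: int):
        self.pages_per_stripe = pages_per_stripe
        self.dirty = defaultdict(set)  # stripe index -> dirty page indices

    def mark_dirty(self, page_index: int):
        """Queue a dirty page; return a full-stripe IOV once one is complete."""
        stripe = page_index // self.pages_per_stripe
        self.dirty[stripe].add(page_index)
        if len(self.dirty[stripe]) == self.pages_per_stripe:
            return sorted(self.dirty.pop(stripe))
        return None

    def flush(self) -> list:
        """Write out everything, e.g. when grants/quota run out.

        Partial stripes are split into contiguous runs, because the
        RAIDframe engine expects contiguous IOVs.
        """
        iovs = []
        for stripe in sorted(self.dirty):
            run = []
            for page in sorted(self.dirty[stripe]):
                if run and page != run[-1] + 1:
                    iovs.append(run)
                    run = []
                run.append(page)
            iovs.append(run)
        self.dirty.clear()
        return iovs


pm = StripePageManager(pages_per_stripe=4)
partial = [pm.mark_dirty(p) for p in (5, 4, 7)]  # stripe 1 still incomplete
full_stripe = pm.mark_dirty(6)                   # completes stripe 1
for p in (0, 2, 9):
    pm.mark_dirty(p)
leftovers = pm.flush()
```

Completing stripe 1 yields one full-stripe IOV covering pages 4 to 7, which avoids the read-modify-write path; the flushed leftovers become three single-page IOVs that would need a parity update each.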

Lock protocols

To maintain consistency while updating parity, locking must be serialized for the full-stripe extent. But full-stripe locks span multiple OSTs: serialized locking leads to cascading evictions, while locking each OST in parallel (lock, I/O and unlock operations issued to each OST in parallel) cannot maintain the atomicity of the parity update.

To meet this requirement, a master lock is introduced. The protocol is as follows:

- In the client SNS layer, extend the extent of the lock so that it is aligned to the full-stripe size.

- The full-stripe lock is divided into G sub stripe-unit locks on the various chunk objects. There are two candidates for the master lock: the sub-lock L for the parity stripe unit P, which resides on OST A, and the sub-lock L' of the stripe unit with index (P + 1) % G, which resides on OST B.

- The client sends the stripe-unit lock requests in parallel.

- The client waits to acquire the master lock from OST A.

- If acquiring lock L fails, the client retries by requesting the remastering lock L' from OST B.

- When it receives the remastering lock request, OST B checks the status of OST A. If OST A is still active, OST B replies to the client that OST A is alive, and the client either retries to acquire the master lock from OST A or reports an error immediately (a network partition may have occurred: the link between the client and OST A has failed while the link between OST A and OST B works). If OST A has indeed failed (power off, etc.), OST B enqueues the lock, records that it was remastered from OST A, and notifies the client that the lock is remastered to OST B.

- If the master lock is acquired successfully, the client starts the subsequent I/O operations.

- When OST A powers back on, the other OSTs in the pool remaster the locks back to OST A.
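The extent alignment and the choice between the two master lock candidates can be sketched as below. This is a simplified model with hypothetical names: OST liveness and client reachability are passed in as sets of stripe-unit indices, and the option of reporting an error immediately is taken when OST B sees OST A alive.

```python
def align_to_full_stripe(start: int, end: int, stripe_size: int) -> tuple:
    """Extend the byte extent [start, end) to full-stripe boundaries."""
    aligned_start = (start // stripe_size) * stripe_size
    aligned_end = -(-end // stripe_size) * stripe_size  # round up
    return aligned_start, aligned_end


def acquire_master_lock(parity_unit: int, width: int,
                        alive: set, reachable: set) -> int:
    """Return the stripe-unit index whose OST grants the master lock.

    alive:     units whose OST is really up (what OST B can observe)
    reachable: units whose OST this client can talk to
    """
    master = parity_unit                # lock L on OST A
    backup = (parity_unit + 1) % width  # lock L' on OST B
    if master in alive and master in reachable:
        return master
    if backup not in alive or backup not in reachable:
        raise ConnectionError("neither master lock candidate is available")
    if master in alive:
        # OST B sees OST A alive: refuse to remaster (possible partition).
        raise ConnectionError("OST A is alive but unreachable from this client")
    return backup                       # OST A is down: remaster to OST B


extent = align_to_full_stripe(5000, 9000, stripe_size=4096)
normal = acquire_master_lock(3, 4, alive={0, 1, 2, 3}, reachable={0, 1, 2, 3})
remastered = acquire_master_lock(3, 4, alive={0, 1, 2}, reachable={0, 1, 2})
```

With G = 4 and the parity unit at index 3, the master lock normally comes from unit 3; when its OST is down, the lock is remastered to unit (3 + 1) % 4 = 0.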

(Complex failures such as network partitions are not considered.)

An earlier proposal was to acquire the locks for data as normal and to acquire the locks for parity only while updating parity during sync (a short period). But for degraded reads we would still face the problem of locking the full stripe.

Use cases

SNS fundamentals cases

QASs

| id | quality attribute | summary |

|---|---|---|

| Extent_lock | Usability & Availability | Avoid cascading evictions when locks span multiple OSTs. |

| parity_grants_quota | Usability | grants/quota for parity. |

| Partial_truncate | Consistency & recovery | Parity consistency and recovery for partial truncate |

| Metadata_recovery | recovery | OSD metadata recovery in degraded case. |

| IO_retry | Usability & recovery | Retry of failed read I/O. |

| Obj_alloc | Usability | Object allocation in SNS pool. |

| SNS_configure | Usability | Configure the SNS device via mountconf |

Extent lock

| Scenario: | Client tries to acquire extent lock for I/O. | |

| Business Goals | Maintain the consistency during parity updating and read in degraded case, avoid cascading evictions | |

| Relevant QA's | Usability & Availability | |

| details | Stimulus | The lock is extended to full-stripe granularity, which leads to locking across multiple OSTs. |

| Stimulus source | Other conflict clients crash or reboot, network failure | |

| Environment | Conflict with other clients | |

| Artifact | Lock requests | |

| Response | Choose the OST on which one of the chunk objects resides as the master lock server. Consistency of concurrent access is maintained by the chosen lock server, and I/O can start as soon as the lock is acquired from the master lock server. Lock requests to the other OSTs are used to update the LVB and metadata such as file size, access time, etc. When a lock blocking AST occurs on a stripe unit of a chunk object, the lock manager on the client writes out the pages in the corresponding full stripe. | |

| Response measure | ||

| Questions | How should failure of the master lock server be handled? Choose another master lock server via election? | |

| Issues | For declustered parity, we can always choose the server on which the parity stripe unit of the full stripe resides as the master lock server, but this makes DLM remastering more complex. | |

grants/quota for parity

| Scenario: | Client tries to cache a dirty page on client. | |

| Business Goals | Provide space grants/quota for client cache (both data and parity). | |

| Relevant QA's | Usability | |

| details | Stimulus | Client writes to a file. |

| Stimulus source | Client | |

| Environment | Parity is redundant and not part of file data; | |

| Artifact | Grants/quota | |

| Response | Client checks whether the page is out of grants/quota of the corresponding OSC; SNS checks grants/quota for the parity at the same time as every data page has a corresponding parity. | |

| Response measure | If one of them fails, client starts to write out pages to update grants/quota. | |

| Questions | A parity RAID can tolerate a single point of failure, including power-off, destruction, or space exhaustion of an OST. Furthermore, parity management on the client is complex. Can we therefore use a smarter strategy that acquires grants/quota only for the data and not for the parity? | |

| Issues | ||

Partial truncate

| Scenario: | Client truncates a file. | |

| Business Goals | Maintain parity consistency after truncate operations | |

| Relevant QA's | Usability | |

| details | Stimulus | Client truncates a file. |

| Stimulus source | Client. | |

| Environment | The file is striped with a parity pattern, and the truncate is a partial truncate. | |

| Artifact | Parity consistency. | |

| Response | SNS sends punch requests to the chunk objects affected by the truncate operation, then updates the parity stripe unit of the last full stripe after the truncate (two phases: punch, parity update). | |

| Response measure | ||

| Questions | ||

| Issues | Inconsistency recovery: if the client updates the parity of the last full stripe but fails to send the punch requests, the stripe becomes inconsistent. The same problem exists if the punch requests are sent first and the parity is updated afterwards. The proposed solution: the client logs the truncate operation in the first phase; when the OST receives the parity update request, it cancels the punch logs. | |

Metadata recovery in degraded case

| Scenario: | Client tries to get file attributes. | |

| Business Goals | Get the correct file metadata information even in degraded case | |

| Relevant QA's | Usability | |

| details | Stimulus | Client gets file attributes such as file size, access time, etc. |

| Stimulus source | Client | |

| Environment | Pool in degraded case; | |

| Artifact | Metadata recovery | |

| Response | In the normal case, the client stores the metadata of the chunk objects it accesses in the EA of the corresponding parity objects for redundancy. | |

| Response measure | In the degraded case, recover the metadata of the invalid chunk object from the redundant information in the other valid chunk objects, then obtain the file metadata (file size, access time, etc.) by merging the information from the various chunk objects. | |

| Questions | ||

| Issues | A write updates at least two chunk objects (data and the corresponding parity), but a read may update only the one chunk object touched by the portion of data read, so there is an open issue of how to store the atime in at least two chunk objects for redundancy. | |

Failed I/O retry

| Scenario: | DAG node for read fails. | |

| Business Goals | Retry the failed read I/O. | |

| Relevant QA's | Usability. | |

| details | Stimulus | the execution of some I/O DAG nodes (such as ROD, ROP, WND, WNP, etc) failed. |

| Stimulus source | failure of OST or network. | |

| Environment | OSTs or the network fail during DAG execution. | |

| Artifact | Retry the failed I/O. | |

| Response | The RAIDframe engine reconfigures the OBD arrays and their state. | |

| Response measure | RAIDframe in SNS creates a new DAG to execute in degraded case to retry the failed I/O. | |

| Questions | ||

| Issues | ||
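When a read node fails, the DAG created for the degraded case has to reconstruct the missing stripe unit from the surviving ones. For a single-parity pattern this is the XOR of all surviving units of the stripe, parity included. The sketch below shows that reconstruction only; it is a minimal illustration with hypothetical names, not the RAIDframe degraded-read DAG.

```python
from functools import reduce


def xor_units(units: list) -> bytes:
    """XOR equal-length stripe units column by column."""
    return bytes(reduce(lambda a, b: a ^ b, column) for column in zip(*units))


def degraded_read(stripe: list, failed: int) -> bytes:
    """Rebuild the unit at index failed from the surviving units.

    stripe holds the data units followed by the parity unit; the entry
    at index failed is unavailable and is ignored.
    """
    survivors = [unit for i, unit in enumerate(stripe) if i != failed]
    return xor_units(survivors)


data = [b"\x01\x02", b"\x10\x20", b"\x0f\x0f"]
stripe = data + [xor_units(data)]   # append the parity unit
stripe[1] = None                    # the OST holding unit 1 has failed
rebuilt = degraded_read(stripe, failed=1)
```

The rebuilt unit is identical to the lost data unit, so the failed read can be retried without contacting the failed OST.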

Object allocation

| Scenario: | Client creates a new file. | |

| Business Goals | Allocate the objects for the newly created file in the dedicated pool. | |

| Relevant QA's | Usability. | |

| details | Stimulus | Client creates a new file or sets the stripe metadata of the file via IOCTL. |

| Stimulus source | Client. | |

| Environment | Client, MDS. | |

| Artifact | Object allocation. | |

| Response | MDS allocates the chunk objects in the pool, stores the file layout metadata in the EA of the file and replies to the client with it. | |

| Response measure | ||

| Questions | ||

| Issues | ||

Configure the SNS device via mountconf

| Scenario: | Client configures the SNS OBD via mountconf | |

| Business Goals | Simplify the process by which the user sets up and configures SNS via mountconf. | |

| Relevant QA's | Usability. | |

| details | Stimulus | Client mounts the lustre file system. |

| Stimulus source | User. | |

| Environment | Client, MDS. | |

| Artifact | Setup SNS device via mountconf. | |

| Response | Client gets the configuration log via llog from the MGS and configures the SNS device and the RAIDframe layout from the SNS log entries. | |

| Response measure | ||

| Questions | Is the configuration of pool for mountconf still by llog? | |

| Issues | ||

Failure and recovery cases

QASs

| id | quality attribute | summary |

|---|---|---|

| client_goes_offline | recovery | client crashes doing i/o |

| ost_goes_down | recovery | OST goes down |

| ost_delay_online | recovery | OST goes online with a delay |

| RAID_rebuild | recovery | an agent does striped object rebuild online |

| RAID_rebuild_crash | recovery | agent or OSTs crashed during striped object rebuild |

client goes offline

| Scenario: | client crashes in the middle of stripe write | |

| Business Goals | resilience to client crashes | |

| Relevant QA's | Availability | |

| details | Stimulus | OST detects that client went offline |

| Stimulus source | client crash or reboot, network failure | |

| Environment | client or network failures | |

| Artifact | striped object consistency | |

| Response | OSTs restore the object consistency after a failed write | |

| Response measure | ||

| Questions | a way to detect incomplete writes | |

| Issues | ||

OST goes down

| Scenario: | OST crashes or reboots | |

| Business Goals | resilience to server crashes | |

| Relevant QA's | Availability | |

| details | Stimulus | Client tries to reconnect to the OST after it reboots |

| Stimulus source | server crash or reboot, network | |

| Environment | server or network failures | |

| Artifact | cluster fs state | |

| Response | OST rolls its state to the CUT and applies client logs | |

| Response measure | ||

| Questions | how does CUT preserve striped object consistency? | |

| Issues | ||

OST goes online with a delay

| Scenario: | OST goes online after a delay | |

| Business Goals | Efficient OST re-integration | |

| Relevant QA's | Availability & Stability | |

| details | Stimulus | degraded object state check, attempt to start RAID recovery agent |

| Stimulus source | server crash or reboot, network failure | |

| Environment | server or network failures | |

| Artifact | recovery agent | |

| Response | striped object cleanup or synchronization | |

| Response measure | striped object state | |

| Questions | ||

| Issues | ||

RAID online recovery (rebuild)

| Scenario: | OST goes online after a delay | |

| Business Goals | restore object's availability | |

| Relevant QA's | Availability | |

| details | Stimulus | sync or async object state check, attempt to start a recovery agent |

| Stimulus source | server crash or reboot, network failure | |

| Environment | Lustre fs with object in degraded mode | |

| Artifact | recovery agent | |

| Response | striped object online recovery | |

| Response measure | striped object state | |

| Questions | ||

| Issues | recovery of recovering process state | |

OST crash during striped object rebuild

| Scenario: | recovery of the online RAID recovery process | |

| Business Goals | restore object's availability | |

| Relevant QA's | Availability | |

| details | Stimulus | recovery agent finds incomplete object recovery |

| Stimulus source | server crash or reboot, network failure | |

| Environment | server or network failures | |

| Artifact | recovery agent | |

| Response | completing striped object online recovery | |

| Response measure | striped object state | |

| Questions | ||

| Issues | ||