WARNING: This is the _old_ Lustre wiki, and it is in the process of being retired. The information found here is all likely to be out of date. Please search the new wiki for more up to date information.

Architecture - HSM Migration

Note: The content on this page reflects the state of design of a Lustre feature at a particular point in time and may contain outdated information.

Purpose

This page describes use cases and high-level architecture for migrating files between Lustre and an HSM system.

Definitions

- Trigger

- A process or event in the file system which causes a migration to take place (or be denied).

- Coordinator

- A service coordinating migration of data.

- Agent

- A service used by coordinators to move data or cancel such movement.

- Mover

- The userspace component of the agent which copies the file between Lustre and the HSM storage.

- Copy tool

- HSM-specific component of the mover. (May be the entire mover.)

- I/O request

- This term groups read requests, write requests and other metadata accesses like truncate or unlink.

- Resident

- A file whose working copy is in Lustre.

- Release

- A released file's data has been removed by Lustre after being copied to the HSM. The MDT retains metadata info for the file.

- Archive

- An archived file's data resides in the HSM. File data may or may not also reside in Lustre. The MDT retains metadata info for the file.

- Restore

- Copy a file from the HSM back into Lustre to make an Archived file Resident.

- Prestage

- An explicit call (from User or Policy Engine) to Restore a Released file.

Use cases

Summary

| id | quality attribute | summary |
|---|---|---|
| Restore | availability | If a file is accessed from the primary storage system (Lustre) but resides in the backend storage system (HSM), it can be copied back into the primary storage system and made available. |
| Archive | availability, usability | The system can copy files from the primary to the backend storage system. |
| access-during-archive | performance, usability | When a file is migrating and is accessed, the migration may be aborted. |
| component-failure | availability | If a migration component fails, the migration is resumed or aborted depending on the migration state and the failing component. |
| unlink | availability | When a Lustre object is deleted, the MDTs or the OSTs request the corresponding removal from the HSM, depending on policy. |

Restore (aka cache-miss aka copyin)

| Item | Description |
|---|---|
| Scenario: | File data is copied from an HSM into Lustre transparently. |
| Business Goals: | Automatically provides filesystem access to all files in the HSM. |
| Relevant QAs: | Availability. |
| Stimulus: | A released file is accessed. |
| Stimulus source: | A client tries to open a released file, or an explicit call to prestage. |
| Environment: | Released and restored files in Lustre. |
| Artifact: | Released file. |
| Response: | Block the client open request. Start a file transfer from the HSM to Lustre using the dedicated copy tool. As soon as the data is fully available, reply to the client. Tag the file as resident. |
| Response measure: | The file is 100% resident in Lustre; the open completes without error or timeout. |
| Questions: | None. |
| Issues: | None. |

Archive (aka copyout)

| Item | Description |
|---|---|
| Scenario: | Some files are copied to the HSM from Lustre. |
| Business Goals: | Provides a large capacity archiving system for Lustre transparently. |
| Relevant QAs: | Availability, usability. |
| Stimulus: | Explicit request. |
| Stimulus source: | Administrator/User or policy engine. |
| Environment: | Candidate files from the policy. |
| Artifact: | Files matching the policy. |
| Response: | The coordinator, receiving the request, starts a transfer between Lustre and the HSM for selected files. A dedicated agent is called and will spawn the copy tool to manage the transfer. When the transfer is completed, the Lustre file is tagged as Archived and Not Dirty on the MDT. |
| Response measure: | Policy compliance. |
| Questions: | None. |
| Issues: | None. |

access-during-archive

| Item | Description |
|---|---|
| Scenario: | The file being archived is accessed during the archival process. |
| Business Goals: | Optimize file accesses. |
| Relevant QAs: | Usability. |
| Stimulus: | File access on migrating file. |
| Stimulus source: | A process on any client opens a file or requests some specific actions. |
| Environment: | File currently undergoing archival. |
| Artifact: | File metadata. |
| Response: | A file that is modified during archival must at no point be marked as up-to-date in the HSM until it has been copied completely and coherently. |
| Response measure: | Policy engine must re-queue file for archival. MDT disallows release of the file. |
| Questions: | None. |
| Issues: | None. |

component-failure

| Item | Description |
|---|---|
| Scenario: | A transfer component fails during a migration. |
| Business Goals: | Interrupted jobs should be restarted. Filesystem and HSM must remain coherent. |
| Relevant QAs: | Availability. |
| Stimulus: | A component peer reaches a timeout exchanging data with a specific component. |
| Stimulus source: | Any component failure (client, MDT, agent). |
| Environment: | Lustre components used for file migration. |
| Artifact: | One component could not be reached anymore. |
| Response: | On client failure, the migration is finished anyway. On MDT/coordinator failure, a recovery mechanism using persistent state must be applied. On agent failure, the archive process is aborted and the coordinator will respawn it when necessary. On OST failure, the archive process is delayed and managed like a traditional I/O. |
| Response measure: | The system is coherent, no data transfer process is hung. |
| Questions: | None. |
| Issues: | None. |

unlink

| Item | Description |
|---|---|
| Scenario: | A client unlinks a file in Lustre. |
| Business Goals: | Do not limit Lustre unlink speed to HSM speed. |
| Relevant QAs: | Availability. |
| Stimulus: | A client issues an unlink request on a file in Lustre. |
| Stimulus source: | A Lustre client. |
| Environment: | A Lustre filesystem with objects archived in the HSM. |
| Artifact: | A file archived in the HSM. |
| Response: | The file is unlinked normally in Lustre. For each Lustre object removed this way, an unlink request is sent to the coordinator for the corresponding removal. This should be asynchronous and may be delayed by a long time. |
| Response measure: | No file object exists anymore in Lustre or the archive. |
| Questions: | None. |
| Issues: | None. |

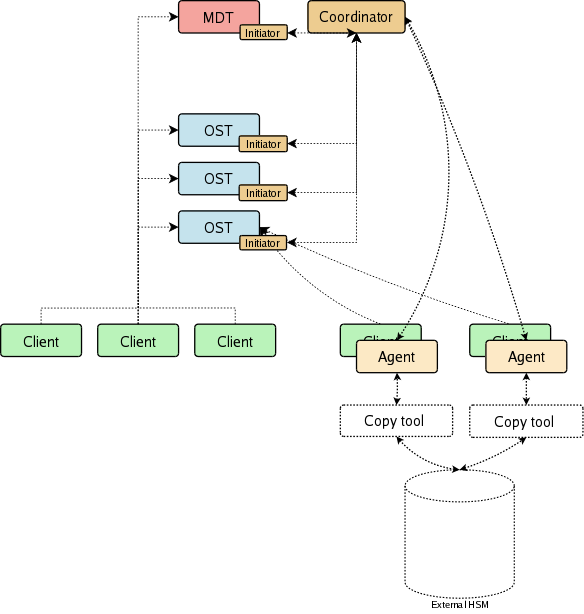

Components

Coordinator

- dispatches requests to agents; chooses agents

- restore <FIDlist>

- archive <FIDlist>

- unlink <FIDlist>

- abort_action <cookie>

- consolidates repeat requests

- re-queues requests to a new agent if an agent becomes unresponsive (aborts old request)

- agents send regular progress updates to coordinator (e.g. current extent)

- coordinator periodically checks for stuck threads

- coordinator requests are persistent

- all requests coming to the coordinator are kept in llog, cancelled when complete or aborted

- kernel-space service, MDT acts as initiator for copyin

- ioctl interface for all requests. Initiators are policy engine, administrator tool, or MDT for cache-miss.

- Location: a coordinator will be directly integrated with each MDT

- Agents will communicate via MDC

- Connection/reconnection already taken care of; no additional pinging, config

- Client mount option will indicate "agent", connect flag will inform MDT

- MDT already has intimate knowledge of HSM bits (see below) and needs to communicate with coordinator anyhow

- HSM comms can use a new portal and reuse MDT threads.

- Coordinators will handle the same namespace segment as each MDT under CMD
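
The coordinator duties listed above (choosing an agent, consolidating repeat requests, cancelling records on completion) can be illustrated with a small in-memory model. This is only a sketch: the class, its fields, and the round-robin agent choice are assumptions made for illustration, and nothing here reflects actual Lustre code or the llog format.

```python
from dataclasses import dataclass, field
from itertools import count


@dataclass
class Coordinator:
    """Toy model: consolidates repeat requests, picks agents round-robin."""
    agents: list
    pending: dict = field(default_factory=dict)    # (action, fid) -> cookie
    assigned: dict = field(default_factory=dict)   # cookie -> agent
    _cookies: count = field(default_factory=lambda: count(1))
    _next: int = 0

    def request(self, action, fid):
        key = (action, fid)
        if key in self.pending:
            # Repeat request for the same action and FID: reuse the cookie.
            return self.pending[key]
        cookie = next(self._cookies)
        self.pending[key] = cookie
        # Hypothetical agent selection policy: simple round-robin.
        self.assigned[cookie] = self.agents[self._next % len(self.agents)]
        self._next += 1
        return cookie

    def complete(self, cookie):
        """Drop the record once the request is complete or aborted."""
        self.assigned.pop(cookie)
        for key, value in list(self.pending.items()):
            if value == cookie:
                del self.pending[key]
```

In this model a second `request("restore", fid)` for a FID that is already queued returns the existing cookie instead of creating new work.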

MDT changes

- Per-file layout lock

- A new layout lock is created for every file. The lock contains a layout version number.

- Private writer lock is taken by the MDT when allocating/changing file layout (LOV EA).

- The lock is not released until the layout change is complete and the data exist in the new layout.

- The MDT will take group extent locks for the entire file. The group ID will be passed to the agent performing the data transfer.

- The current layout version is stored by the OSTs for each object in the layout.

- Shared reader locks are taken by anyone reading the layout (client opens, lfs getstripe) to get the layout version.

- Anyone taking a new extent lock anywhere in the file includes the layout version. The OST will grant an extent lock only if the layout version included in the RPC matches the object layout version.

- lov EA changes

- flags

- hsm_released: file is not resident on OSTs; only in HSM

- hsm_exists: some version of this fid exists in HSM; maybe partial or outdated

- hsm_dirty: file in HSM is out of date

- hsm_archived: a full copy of this file exists in HSM; if not hsm_dirty, then the HSM copy is current.

- The hsm_released flag is always manipulated under a write layout lock, the other flags are not.

- new ioctls for HSM control:

- HSM_REQUEST: policy engine or admin requests (archive, release, restore, remove, cancel) <FIDlist>

- HSM_STATE_GET: user requests HSM status information on a single file

- HSM_STATE_SET: user sets HSM policy flags for a single file (HSM_NORELEASE, HSM_NOARCHIVE)

- HSM_PROGRESS: copytool reports periodic state of a single request (current extent, error)

- HSM_TAPEFILE_ADD: add an existing archived file into the Lustre filesystem (only metadata is copied).

- changelogs:

- new events for HSM event completion

- restore_complete

- archive_complete

- unlink_complete

- per-event flags used by HSM

- setattr: data_changed (actually mtime_changed for V1)

- archive_complete: hsm_dirty

- all HSM events: hsm_failed
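
The four LOV EA flags described above combine in a few well-defined ways. A minimal sketch of those combinations, assuming a hypothetical `HsmFlags` type (the names and bit values are illustrative, not the on-disk encoding):

```python
from enum import Flag, auto


class HsmFlags(Flag):
    NONE = 0
    RELEASED = auto()   # hsm_released: not resident on OSTs, only in HSM
    EXISTS = auto()     # hsm_exists: some version exists in HSM
    DIRTY = auto()      # hsm_dirty: file in HSM is out of date
    ARCHIVED = auto()   # hsm_archived: a full copy exists in HSM


def hsm_copy_is_current(flags):
    """Archived and not dirty means the HSM copy is current."""
    return bool(flags & HsmFlags.ARCHIVED) and not flags & HsmFlags.DIRTY


def on_write(flags):
    """Any write to the file sets the dirty bit."""
    return flags | HsmFlags.DIRTY
```

For example, a file with `EXISTS | ARCHIVED` has a current HSM copy until `on_write` is applied to it.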

Agent

An agent manages local HSM requests on a client.

- one agent per client max; most clients will not have agents

- consists of two parts

- kernel component receives messages from the coordinator (LNET comms)

- agents and coordinator piggyback comms on MDC/MDT: connections, recovery, etc.

- coordinator uses reverse imports to send RPCs to agents

- userspace process copies data between Lustre and HSM backend

- will use special fid directory for file access (.lustre/fid/XXXX)

- interfaces with hardware-specific copytool to access HSM files

- kernel process passes requests to userspace process via socket

Copytool

The copytool copies data between Lustre and the HSM backend, and deletes the HSM object when necessary.

- userspace; runs on a Lustre client with HSM i/o access

- opens objects by fid

- may manipulate HSM mode flags in an EA.

- uses ioctl calls on the (opened-by-fid) file to report progress to MDT. Note MDT must pass some messages on to Coordinator.

- updates progress regularly while waiting for HSM (e.g. every X seconds)

- reports error conditions

- reports current extent

- copytools are HSM-specific, since they must move data to the HSM archive

- version 1 will include tools for HPSS and SAM-QFS

- other, vendor-proprietary (binary) tools may be wrapped in order to include Lustre ioctl progress calls.
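
The progress-reporting behaviour of a copytool can be sketched as a chunked copy loop that reports the current extent after each chunk. The `report` callback below is a hypothetical stand-in for the progress ioctl, and reporting per chunk rather than every X seconds is a simplification.

```python
import io


def copy_with_progress(src, dst, report, chunk_size=1 << 20):
    """Copy src to dst, calling report(start, end) after each chunk.

    `report` is a hypothetical callback standing in for the progress ioctl.
    Returns the total number of bytes copied.
    """
    offset = 0
    while True:
        chunk = src.read(chunk_size)
        if not chunk:
            break
        dst.write(chunk)
        report(offset, offset + len(chunk))
        offset += len(chunk)
    return offset


# Usage with in-memory streams standing in for the HSM and Lustre files.
source = io.BytesIO(b"x" * 10)
target = io.BytesIO()
extents = []
copied = copy_with_progress(
    source, target, lambda s, e: extents.append((s, e)), chunk_size=4
)
```

A wrapper around a vendor tool could follow the same shape, with the callback translating each extent into whatever progress call the MDT expects.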

Policy Engine

- makes policy decisions for archive, release (which files and when)

- policy engine will provide the functionality of the Space Manager and any other archive/release policies

- may be based on space available per filesystem, OST, or pool

- may be based on any filesystem or per-file attributes (last access time, file size, file type, etc)

- policy engine will therefore require access to various user-available info: changelogs, getstripe, lfs df, stat, lctl get_param, etc.

- normally uses changelogs and 'df' for input; is rarely allowed to scan the filesystem

- changelogs are available to superuser on Lustre clients

- filesystem scans are expensive; allowed only at initial HSM setup time or other rare events

- the policy engine runs as a userspace process; requests archive and release via file ioctl to coordinator (through MDT).

- policy engine may be packaged separately from Lustre

- the policy engine may use HSM-backend specific features (e.g. HPSS storage class) for policy optimizations, but these will be kept modularized so they are easily removed for other systems.

- API can pass an opaque arbitrary chunk of data (char array, size) from policy engine ioctl call through coordinator and agent to copytool.
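
As an illustration of a policy based on per-file attributes and available space, the sketch below picks release candidates by last access time until enough bytes would be freed. The `FileInfo` record, the one-day idle threshold, and the function name are all assumptions; a real policy engine would gather this information from changelogs and `df` output.

```python
from dataclasses import dataclass


@dataclass
class FileInfo:
    fid: str
    size: int        # bytes
    atime: float     # last access time, seconds since the epoch
    archived: bool   # True if a current copy exists in the HSM


def pick_release_candidates(files, now, bytes_needed, min_idle=86400):
    """Pick archived, idle files, least recently accessed first,
    until releasing them would free at least `bytes_needed` bytes."""
    idle = [f for f in files if f.archived and now - f.atime >= min_idle]
    idle.sort(key=lambda f: f.atime)
    chosen, freed = [], 0
    for f in idle:
        if freed >= bytes_needed:
            break
        chosen.append(f.fid)
        freed += f.size
    return chosen
```

Files without a current HSM copy are never selected, which mirrors the rule that only archived, non-dirty files may be released.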

Configuration

- policy engine has its own external configuration

- coordinator starts as part of MDT; tracks agents registrations as clients connect

- connect flag to indicate agent should run on this MDC

- mdt_set_info RPC for setting agent status using 'remount'

Scenarios

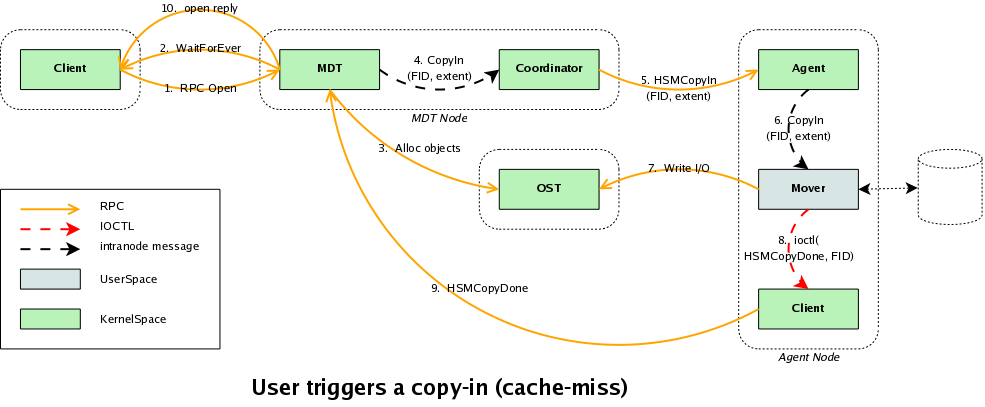

restore (aka cache-miss, copyin)

Version 1 ("simple"): "Migration on open" policy

Clients block at open for read and write. OSTs are not involved.

- Client layout-intent enqueues layout read lock on the MDT.

- MDT checks hsm_released bit; if released, the MDT takes PW lock on the layout

- MDT creates a new layout with a similar stripe pattern as the original, increasing the layout version, and allocating new objects on new OSTs with the new version.

- We should try to respect specific layout settings (pool, stripecount, stripesize), but be flexible if, for example, the pool no longer exists.

- We may want to ignore stripe offset and/or specific OST allocations in order to rebalance.

- MDT enqueues group write lock on extents 0-EOF

- Extents lock enqueue timeout must be very long while group lock is held (need proc tunable here)

- MDT releases PW layout lock

- Client open succeeds at this point, but r/w is blocked on extent locks

- MDT sends request to coordinator requesting restore of the file to .lustre/fid/XXXX with group lock id and extents 0-EOF. (Extents may be used in the future to (a) copy in part of a file, in low-disk-space situations; (b) copy in individual stripes simultaneously on multiple OSTs.)

- Coordinator distributes that request to an appropriate agent.

- Agent starts copytool

- Copytool opens .lustre/fid/<fid>

- Copytool takes group extents lock

- Copytool copies data from HSM, reporting progress via ioctl

- When finished, copytool reports progress of 0-EOF and closes the file, releasing group extents lock.

- MDT clears hsm_released bit

- MDT releases group extents lock

- This sends a completion AST to the original client, which now receives its extent lock.

- MDT adds FID <fid> HSM_copyin_complete record to changelog (flags: failed)
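
The role of the layout version in this sequence can be shown with a toy model: new objects are allocated under an increased version, and an extent lock request carrying a stale version is refused. The class and function names are hypothetical and the model ignores all real locking.

```python
class OstObject:
    """Toy OST object that remembers the layout version it was created with."""

    def __init__(self, layout_version):
        self.layout_version = layout_version

    def grant_extent_lock(self, client_layout_version):
        # Grant only if the version carried in the request matches the object's.
        return client_layout_version == self.layout_version


def restore_layout(old_version):
    """On restore, new objects are allocated under an increased version."""
    new_version = old_version + 1
    return new_version, OstObject(new_version)
```

A client that cached the layout before the restore would have to re-read it (taking the shared layout lock) before its extent lock request could succeed.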

Version 2 ("complex"): "Migration on first I/O" policy

Clients are able to read/write the file data as soon as possible and the OSTs need to prevent access to the parts of the file which have not yet been restored.

- getattr: attributes can be returned from MDT with no HSM involvement

- MDS holds file size[*]

- client may get MDS attribute read locks, but not layout lock

- Client open intent enqueues layout read lock.

- MDT checks "purged" bit

- MDT creates a new layout with a similar stripe pattern as the original, allocating new objects on new OSTs with per-object "purged" bits set.

- MDT grants layout lock to client and open completes

- ?Should we pre-stage: MDT sends request to coordinator requesting copyin of the file to .lustre/fid/XXXX with extents 0-EOF.

- client enqueues extent lock on OST. Must wait forever.

- check OST object is marked fully/partly invalid

- object may have persistent invalid map of extent(s) that indicate which parts of object require copy-in

- access to invalid parts of object trigger copy-in upcall to coordinator for those extents

- coordinator consolidates repeat requests for the same range (e.g. if entire file has already been queued for copyin, ignore specific range requests??)

- ? group locks on invalid part of file block writes to missing data

- clients block waiting on extent locks for invalid parts of objects

- OST crash at this time will restart enqueue process during replay

- coordinator contacts agent(s) to retrieve FID N extents X-Y from HSM

- copytool writes to actual object to be restored with "clear invalid" flag (special write)

- writes by agent shrink invalid extent, periodically update on-disk invalid extent and release locks on that part of file (on commit?)

- note changing lock extents (lock conversion) is not currently possible but is a long-term Lustre performance improvement goal.

- client is granted extent lock when that part of file is copied in
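
The shrinking invalid-extent map in Version 2 can be sketched with half-open byte ranges. This is an illustrative model only: the list-of-tuples representation and both function names are assumptions, and persistence of the map is not modelled.

```python
def shrink_invalid(invalid, start, end):
    """Remove [start, end) from a list of invalid [s, e) extents."""
    result = []
    for s, e in invalid:
        if e <= start or s >= end:
            result.append((s, e))        # no overlap: still invalid
            continue
        if s < start:
            result.append((s, start))    # left remainder stays invalid
        if e > end:
            result.append((end, e))      # right remainder stays invalid
    return result


def can_grant(invalid, start, end):
    """An extent lock can be granted once no invalid extent overlaps it."""
    return all(e <= start or s >= end for s, e in invalid)
```

Each special write by the agent would call something like `shrink_invalid`, and a blocked client lock becomes grantable as soon as `can_grant` holds for its range.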

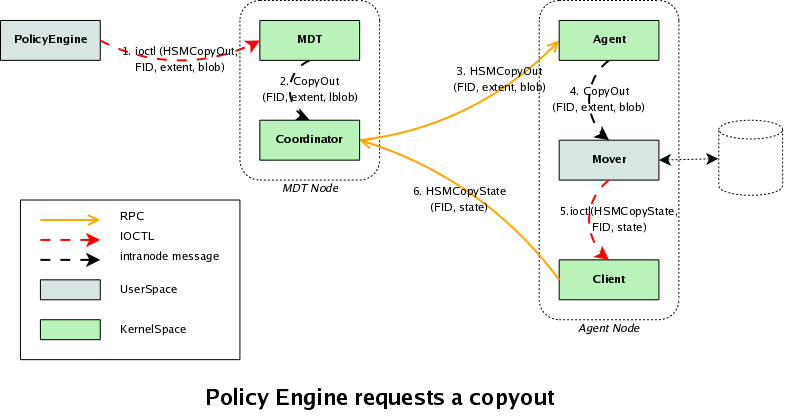

copyout

- Policy engine (or administrator) decides to copy a file to HSM, executes HSMCopyOut ioctl on file

- ioctl caught by MDT, which passes request to Coordinator

- coordinator dispatches request to mover. Request includes file extents (for future purposes)

- normal extents read lock is taken by mover running on client

- mover sends "copyout begin" message to coordinator via ioctl on the file

- coordinator/MDT sets "hsm_exists" bit and clears "hsm_dirty" bit.

- "hsm_exists" bit is never cleared, and indicates a copy (maybe partial/out of date) exists in the HSM

- any writes to the file cause the MDT to set the "hsm_dirty" bit (may be lazy/delayed with mtime or filesize change updates on MDT for V1).

- file writes need not cancel copyout (settable via policy? Implementation in V2.)

- mover sends status update to coordinator via periodic ioctl calls on the file (e.g % complete)

- mover sends "copyout done" message to coordinator via ioctl

- coordinator/MDT checks hsm_dirty bit.

- If not dirty, MDT sets "copyout_complete" bit.

- If dirty, coordinator dispatches another copyout request and the sequence repeats from step 3 (dispatch to mover)

- MDT adds FID X HSM_copyout_complete record to changelog

- Policy engine notes HSM_copyout_complete record from changelog (flags: failed, dirty)

(Note: files modified after copyout is complete will have both the copyout_complete and hsm_dirty bits set.)
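
The bit transitions in the copyout sequence can be summarized as a small state model. The class below is a hypothetical sketch of the per-file bits only; it does not model locking, the coordinator, or the changelog.

```python
class CopyoutState:
    """Toy model of the per-file bits touched during copyout."""

    def __init__(self):
        self.hsm_exists = False
        self.hsm_dirty = False
        self.copyout_complete = False

    def begin(self):
        """'copyout begin': set hsm_exists (never cleared), clear hsm_dirty."""
        self.hsm_exists = True
        self.hsm_dirty = False

    def write(self):
        """Any write to the file sets hsm_dirty."""
        self.hsm_dirty = True

    def done(self):
        """'copyout done': True if coherent, False if it must be re-dispatched."""
        if self.hsm_dirty:
            return False
        self.copyout_complete = True
        return True
```

A write between `begin()` and `done()` makes `done()` return `False`, which corresponds to the coordinator dispatching another copyout request.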

purge (aka punch)

V1: full file purge

- Policy engine (or administrator) decides to purge a file, executes HSMPurge ioctl on file

- ioctl handled by MDT

- MDT takes a write lock on the file layout lock

- MDT enqueues write locks on all extents of the file. Once these are granted, no client has any dirty cache and no client can take new extent locks until the layout lock is released. MDT drops all extent locks.

- MDT verifies that hsm_dirty bit is clear and copyout_complete bit is set

- if not, the file cannot be purged, return EPERM

- MDT marks the LOV EA as "purged"

- MDT sends destroys to the OST objects, using destroy llog entries to guard against object leakage during OST failover

- the OSTs should eventually purge the objects during orphan recovery

- MDT drops layout lock.
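
The precondition check in the V1 purge sequence is small enough to state directly. A minimal sketch, assuming a hypothetical helper that returns a negative errno value in the kernel style:

```python
import errno


def check_purge_allowed(hsm_dirty, copyout_complete):
    """Return 0 if the file may be purged, or -EPERM otherwise."""
    if hsm_dirty or not copyout_complete:
        return -errno.EPERM
    return 0
```

Only a file whose copyout has completed and which has not been written to since passes the check.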

V2: partial purge

Partially purged files would hopefully allow graphical file browsers to retrieve file header info or icons stored at the beginning or end of a file. Note: determine exactly which parts of a file Windows Explorer reads to generate its icons.

- MDT sends purge range to first and last objects, and destroys to all intermediate objects, using llog entries for recovery.

- First and last OSTs record purge range

- When requesting copyin of the entire file (first access to the middle of a partially purged file), MDT destroys old partial objects before allocating new layout. (Or: we keep old first and last objects, just allocate new "middle object" striping - yuck.)

unlink

- A client issues an unlink for a file to the MDT.

- The MDT includes the "hsm_exists" bit in the changelog unlink entry

- The policy engine determines if the file should be removed from HSM

- Policy engine sends HSMunlink FID to coordinator via MDT ioctl

- ioctl will be on the directory .lustre/fid

- or perhaps on a new .lustre/dev/XXX where any lustre device may be listed, and act as stub files for handling ioctls.

- The coordinator sends a request to one of its agents for the corresponding removal.

- The agent spawns the HSM tool to do this removal.

- HSM tool reports completion via another MDT ioctl

- Coordinator cancels unlink request record

- In case of agent crash, unlink request will remain uncancelled and coordinator will eventually requeue

- In case of coordinator crash, agent ioctl will proceed after recovery

- Policy engine notes HSM_unlink_complete record from changelog (flags: failed)

abort

- abort dead agent

- the coordinator must send an abort signal to an agent to abort a copyout/copyin if it determines the migration is stuck/crashed. The coordinator can then re-queue the migration request elsewhere.

- dirty-while-copyout

- If a file is written to while it is being copied out, the copy in the HSM may be incoherent in some cases.

- We could send abort signal, but:

- If a filesystem has a single massive file that is used all the time, it will never get backed up if we abort.

- Not a problem if just appending to a file

- Most backup systems work this way with relatively little harm.

- V1: don't abort this case

- V2: abort in this case is a settable policy

Recovery

MDT crash

- MDT crashes and is restarted.

- The coordinator recreates its migration list by reading its llog.

- The client, when doing its recovery with the MDT, reconnects to the coordinator.

- Copytool eventually sends its periodic status update for migrating files (asynchronously from reconnect).

- As far as the copytools/agent is concerned, the MDT restart is invisible.

Note: The migration list is simply the list of unfinished migrations which may be read from the llog at any time (no need to keep it in memory all the time, if there are many open migration requests).

Logs should contain:

- fid, request type, agent_id (for aborts)

- if the list is not kept in memory: last_status_update_time, last_status.
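
Rebuilding the migration list after an MDT restart amounts to replaying the log and dropping cancelled requests. The sketch below assumes a hypothetical record layout (a dict with `cookie`, `op`, `fid`, and `agent_id` keys, and a separate `cancel` record); the real llog API and record format are not shown here.

```python
def replay_migration_list(records):
    """Rebuild the unfinished migrations from an append-only log.

    Each record is a dict. A record with op == "cancel" removes the
    earlier request that carries the same cookie.
    """
    active = {}
    for rec in records:
        if rec["op"] == "cancel":
            active.pop(rec["cookie"], None)
        else:
            active[rec["cookie"]] = (rec["fid"], rec["op"], rec["agent_id"])
    return active
```

Because the list is derived entirely from the log, it can be recomputed at any time rather than being kept in memory.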

Client crash

- Client stops communicating with MDT

- MDT evicts client

- Eviction triggers coordinator to immediately re-dispatch all of the migrations from that agent

- For copyin, it is desirable that any existing agent I/O is stopped

- Ghost client and copytool may still be alive and communicating with OSTs, but not MDT. Can't send abort.

- Taking file extent locks will only temporarily stop ghost.

- It's not so bad if new agent and ghost are racing trying to copyin the file at the same time.

- Regular extent locks prevent file corruption

- The file data being copied in is the same

- Ghost copyin may still be ongoing after new copyin has finished, in which case ghost may overwrite newly-modified data (data modified by regular clients after HSM/Lustre think copyin is complete).

Copytool crash

Copytool crash is different from a client crash, since the client will not get evicted.

- Copytool crashes

- Coordinator notices no status updates

- Coordinator sends abort signal to old agent

- Coordinator re-dispatches migration
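
Detecting a crashed copytool from missing status updates, then aborting and re-dispatching, can be sketched as follows. The timeout value, the data structures, and the choice of the first other agent are assumptions made for illustration.

```python
def find_stuck(last_update, now, timeout):
    """Return cookies whose last status update is older than `timeout` seconds."""
    return sorted(c for c, t in last_update.items() if now - t > timeout)


def redispatch(assignments, stuck, agents):
    """Reassign each stuck request; return the (agent, cookie) aborts to send."""
    aborts = []
    for cookie in stuck:
        old = assignments[cookie]
        aborts.append((old, cookie))     # abort signal goes to the old agent
        others = [a for a in agents if a != old]
        # Fall back to the same agent if it is the only one registered.
        assignments[cookie] = others[0] if others else old
    return aborts
```

The abort is recorded before the reassignment so that the old agent is told to stop before another agent starts the same migration.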

Implementation constraints

- all single-file coherency issues are in kernel space (file locking, recovery)

- all policy decisions are in user space (using changelogs, df, etc)

- coordinator/mover communication will use LNET

- Version 1 HSM is a simplified implementation:

- integration with HPSS only

- depends on changelog for policy decisions

- restore on file open, not data read/write

- HSM tracks entire files, not stripe objects

- HSM namespace is flat, all files are addressed by FID only

- Coordinator and movers can be reused by (non-HSM) replication

HSM Migration components & interactions

Note: for V1, copyin initiators are on MDT only (file open).

For further review/detail

- "complex" HSM roadmap

- partial access to files during restore

- partial purging for file type identification, image thumbnails, ??

- integration with other HSM backends (ADM, ??)

- How can layout locks be held in liblustre